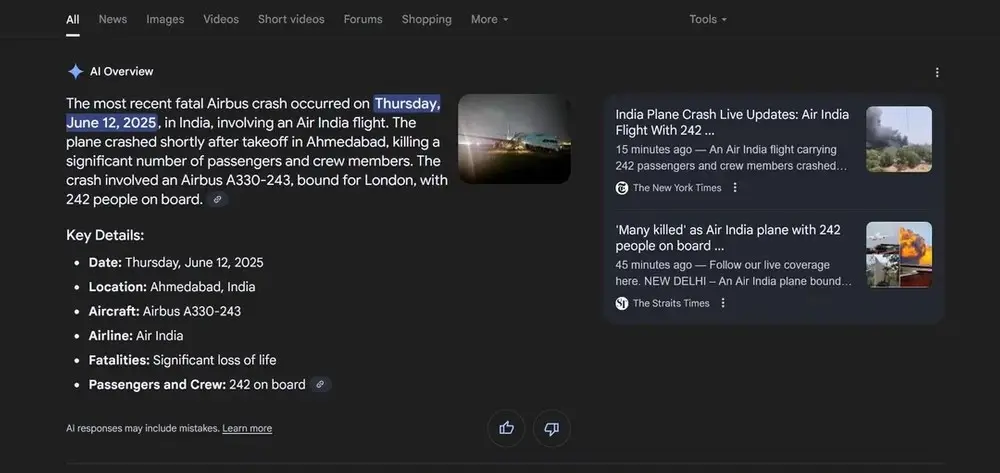

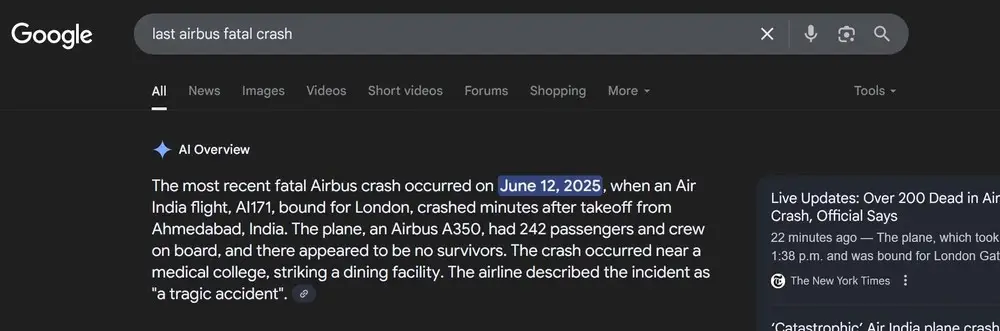

One particularly egregious error came from Google Search’s AI Overview feature, which named an Airbus A330-243 as the aircraft involved in the Air India Flight 171 crash instead of the Boeing 787 Dreamliner that actually crashed. The accident claimed the lives of 241 people on board and at least 28 on the ground. At least one Reddit user documented the error by sharing a screenshot of the inaccurate snippet, which showed no signs of manipulation.

One user found that, in response to a search for “accidents involving Airbus aircraft,” AI Overview linked the Air India crash to an Airbus A330, presenting entirely false information. Subsequent tests painted an even more muddled picture: depending on how the query was phrased, the AI responses sometimes named Boeing, sometimes Airbus, and sometimes a perplexing combination of the two.

It is difficult to determine the cause of these discrepancies. Because Google’s generative models are non-deterministic, they can produce different results even when given identical inputs. One possible explanation is that, during automatic synthesis, the AI picked up the references to Airbus as Boeing’s main rival that appear in many articles about the accident and drew erroneous conclusions from them.
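To make the idea of non-determinism concrete, here is a minimal Python sketch of how sampling from a probability distribution can return different answers to the same question. It is an illustration only, not a description of Google's system: the candidate answers, the probabilities in `TOY_DISTRIBUTION`, and the function names are all hypothetical and chosen for this example.

```python
import random
from collections import Counter

# Hypothetical probabilities a model might assign to candidate answers
# for one fixed query. These numbers are invented for illustration.
TOY_DISTRIBUTION = {
    "Boeing 787": 0.80,
    "Airbus A330": 0.15,
    "Boeing/Airbus mix": 0.05,
}


def sample_answer(rng):
    """Draw one answer at random, weighted by its probability."""
    options = list(TOY_DISTRIBUTION)
    weights = list(TOY_DISTRIBUTION.values())
    return rng.choices(options, weights=weights, k=1)[0]


def greedy_answer():
    """Always return the single most probable answer (deterministic)."""
    return max(TOY_DISTRIBUTION, key=TOY_DISTRIBUTION.get)


def tally(n, seed):
    """Ask the same 'question' n times and count the sampled answers."""
    rng = random.Random(seed)
    return Counter(sample_answer(rng) for _ in range(n))


if __name__ == "__main__":
    # The input never changes, yet the sampled answers do.
    print(tally(1000, seed=0))
    print(greedy_answer())
```

In this toy setup the most likely answer is correct, but a sampled response still lands on a wrong option in a meaningful share of runs, which is consistent with the mixed results users reported.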

A BRIEF LOOK AT AI OVERVIEW

Google Search introduced AI Overview in 2024, and the feature has recently become available in Europe, including Italy. According to the company, it appears in the search results “when our systems determine that generative AI can be particularly useful, for example, to quickly understand information from a range of sources.” Because the summary sits at the top of the results and frequently uses confident, assertive language, users may take it to be accurate.

A warning appears at the end of the snippet and on the official support page: “AI responses may contain errors” and “although exciting, this rapidly and continuously evolving technology may provide inaccurate or offensive information.” However, a disclaimer at the bottom of the page is wholly inadequate in a case like a plane crash, where identifying the manufacturer is crucial to understanding what happened and where industrial responsibility lies.

IMPLICATIONS AND PRECEDENTS

The mistake affects reputations as well as information. Implicating Airbus in a disaster involving a Boeing aircraft skews public opinion and undermines the standard of accuracy that ought to guide news reporting. At the same time, the mistake may give Boeing unintended cover. Boeing is already under pressure from a long list of serious problems across its aircraft portfolio, particularly the 737 line.

This is not the first time AI Overview has been at the center of a similar episode. During the testing phase in the United States in 2024, several incorrect responses went viral, such as the suggestion to use glue to help mozzarella stick to pizza, or the advice to eat a small stone every day to improve digestion, which was lifted from a satirical article in The Onion. In those cases, however, the absurdity of the information was obvious; they cannot be compared to errors about real, tragic events.

THE RISK OF PROBABILISTIC INFORMATION

The episode highlights a key risk of systems based on large language models (LLMs): they can give answers that are wrong, incomplete, or misleading while still sounding plausible. Even when working from accurate sources, AI can go astray because it does not fully grasp context and meaning. Errors in traditional print media are signed, verifiable, and usually contained; errors made by artificial intelligence spread silently and pervasively, with the force of automation and the speed of indexing.

When such output feeds the now widespread social habit of “I found it on Google,” there is a very high chance that information becomes probabilistic rather than factual. In some respects these mistakes are even more serious than those of traditional journalism, because they are concealed by an air of technical neutrality.

GOOGLE’S REACTION

In response to the report, Google told Ars Technica that it had manually removed the inaccurate response from AI Overview. The company said in a formal statement:

“Like all search features, we are constantly refining our systems and updating them with examples like this. The response is no longer visible. The accuracy rate of AI overviews is comparable to that of other features, such as featured snippets, and we uphold high standards for all search features.”

The statement underscores the responsiveness of the search team, yet it fails to address the issue of accountability for information. That an error like this was fixed only after user intervention and press coverage shows how weak the link between AI and editorial control is in the current model. The case therefore raises further concerns about the reliability of AI in search at a time when Google is pushing for its global expansion. Users, of course, are advised to always broaden their searches and never take anything produced by an AI for granted.